Container deployment pipeline with AWS Fargate and GitHub Actions - complete guide

How to set up a container deployment pipeline with AWS Fargate and GitHub Actions

There are many tutorials and scattered pieces of documentation on how to do this, but no single guide you can follow from start to finish. This is an attempt at one.

Requirements

For this to work, you will need a few things set up first. Some of them won’t be covered in full, because we want to focus on the AWS Fargate deployment pipeline.

1. AWS CLI

We’ll use the AWS CLI to set up some of the requirements, so follow the installation and setup guide here

2. An IAM user with the right permissions

This is very important and sometimes not easy to get right.

- Open the IAM console

- Go to roles on the left

- Go to ecsTaskExecutionRole (if it’s not present, follow this guide to create it)

- Add inline policy, select JSON

- Paste the following JSON into it, replacing ${YOUR_IAM} with your IAM (the number identifying your AWS account) and ${YOUR_REGION} with your region; secret_name and key_id stand for your own secret name and KMS key ID

{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": ["kms:Decrypt", "secretsmanager:GetSecretValue"],
      "Resource": [
        "arn:aws:secretsmanager:${YOUR_REGION}:${YOUR_IAM}:secret:secret_name",
        "arn:aws:kms:${YOUR_REGION}:${YOUR_IAM}:key/key_id"
      ]
    }
  ]
}

- Name the policy and create it

- Make sure your IAM user has access to ECS and ECR
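As a rough illustration of how the placeholders in the policy fit together, here is a minimal sketch that builds the same policy document from its four inputs. The function name buildSecretsPolicy and the example values are made up for this guide; nothing is sent to AWS, the result still has to be pasted into the console.

```javascript
// Sketch: build the inline policy document for ecsTaskExecutionRole.
// All four inputs are values you supply yourself; nothing is looked up in AWS.
function buildSecretsPolicy({ accountId, region, secretName, keyId }) {
  // AWS account IDs are 12-digit numbers, passed here as a string.
  if (!/^\d{12}$/.test(accountId)) {
    throw new Error(`accountId must be a 12-digit string, got "${accountId}"`)
  }
  return {
    Version: '2012-10-17',
    Statement: [
      {
        Effect: 'Allow',
        Action: ['kms:Decrypt', 'secretsmanager:GetSecretValue'],
        Resource: [
          `arn:aws:secretsmanager:${region}:${accountId}:secret:${secretName}`,
          `arn:aws:kms:${region}:${accountId}:key/${keyId}`
        ]
      }
    ]
  }
}

// Example values are invented for illustration only.
const examplePolicy = buildSecretsPolicy({
  accountId: '123456789012',
  region: 'us-east-2',
  secretName: 'secret_name',
  keyId: 'key_id'
})
console.log(JSON.stringify(examplePolicy, null, 2))
```

Generating the document this way mostly helps avoid typos in the two ARNs, which are the usual source of permission errors.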

3. A GitHub repository and project with a Docker image

Let’s say you have a Node.js project in a GitHub repository with the following files

$ ls -la

-rw-r--r-- 1 user 12653 feb 01 15:02 index.js

-rw-r--r-- 1 user 12653 feb 01 15:02 package.json

-rw-r--r-- 1 user 12653 feb 01 15:02 Dockerfile

And the contents of the index file

$ cat index.js

const express = require('express')

const app = express()

const port = 3000

app.get('/', (req, res) => res.send('Hello World!'))

app.listen(port, () => console.log(`Example app listening on port ${port}!`))

For the Dockerfile, we’re going to have the simplest example as well

# Docker image

FROM node:13.6.0

# Working directory

WORKDIR /usr/src/app

# Copy the package manifests

COPY package*.json ./

# Install app dependencies

RUN npm install

# Copy source

COPY . .

# Port

EXPOSE 3000

# Run

CMD ["node", "index.js"]

The workflow

Now let’s add the GitHub Actions starter workflow template, which you can find in the AWS GitHub Actions repository, and follow its instructions

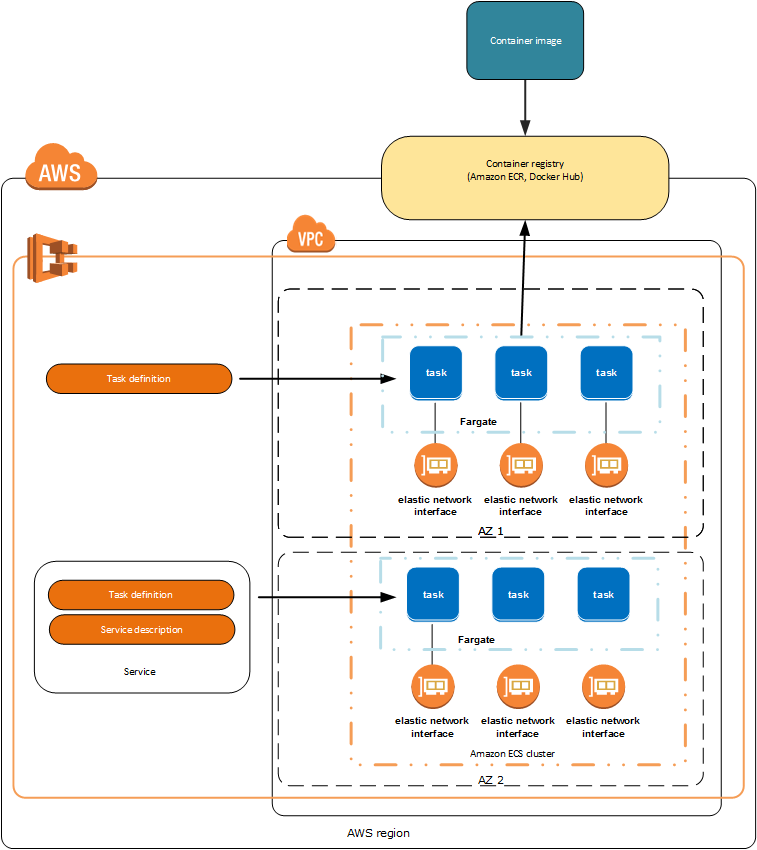

The following workflow builds and pushes a new container image to Amazon ECR (the Amazon container registry) and then deploys a new Task Definition to Amazon ECS on every push to the master branch. The task pulls the Docker image from ECR and runs it.

Create a new folder called .github/workflows in your project root and add this file to it as aws.yml

on:
  push:
    branches:
      - master

name: Deploy to Amazon ECS

jobs:
  deploy:
    name: Deploy
    runs-on: ubuntu-latest

    steps:
      - name: Checkout
        uses: actions/checkout@v2

      - name: Configure AWS credentials
        uses: aws-actions/configure-aws-credentials@v1
        with:
          aws-access-key-id: ${{ secrets.AWS_ACCESS_KEY_ID }}
          aws-secret-access-key: ${{ secrets.AWS_SECRET_ACCESS_KEY }}
          aws-region: us-east-2

      - name: Login to Amazon ECR
        id: login-ecr
        uses: aws-actions/amazon-ecr-login@v1

      - name: Build, tag, and push image to Amazon ECR
        id: build-image
        env:
          ECR_REGISTRY: ${{ steps.login-ecr.outputs.registry }}
          ECR_REPOSITORY: test-repo
          IMAGE_TAG: ${{ github.sha }}
        run: |
          # Build a docker container and
          # push it to ECR so that it can
          # be deployed to ECS.
          docker build -t $ECR_REGISTRY/$ECR_REPOSITORY:$IMAGE_TAG .
          docker push $ECR_REGISTRY/$ECR_REPOSITORY:$IMAGE_TAG
          echo "image=$ECR_REGISTRY/$ECR_REPOSITORY:$IMAGE_TAG" >> $GITHUB_OUTPUT

      - name: Fill in the new image ID in the Amazon ECS task definition
        id: task-def
        uses: aws-actions/amazon-ecs-render-task-definition@v1
        with:
          task-definition: test-task.json
          container-name: test-task
          image: ${{ steps.build-image.outputs.image }}

      - name: Deploy Amazon ECS task definition
        uses: aws-actions/amazon-ecs-deploy-task-definition@v1
        with:
          task-definition: ${{ steps.task-def.outputs.task-definition }}
          service: test-service
          cluster: test-cluster
          wait-for-service-stability: true

Note: The task-definition field in the task-def step of the workflow above has to match the name of the task definition file created in the set-up steps below (test-task.json).

To use this workflow, you will need to complete the following set-up steps:

1. Create an ECR repository to store your images.

For example: aws ecr create-repository --repository-name test-repo --region us-east-2. Replace the value of ECR_REPOSITORY in the workflow above with your repository’s name.

Replace the value of aws-region in the workflow above with your repository’s region.

An ECR repository is a place to store your container images on AWS.
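The workflow tags every image with the commit SHA and pushes it to this repository. A minimal sketch of how that image reference is put together, assuming the registry host follows the account.dkr.ecr.region.amazonaws.com pattern used elsewhere in this guide; the function names, the account number and the short SHA are invented for illustration:

```javascript
// Sketch: compose the image reference that the workflow builds and pushes.
// The registry host pattern is taken from the task definition in this guide.
function ecrRegistryHost(accountId, region) {
  return `${accountId}.dkr.ecr.${region}.amazonaws.com`
}

// Mirrors $ECR_REGISTRY/$ECR_REPOSITORY:$IMAGE_TAG from the workflow.
function imageReference({ registry, repository, tag }) {
  return `${registry}/${repository}:${tag}`
}

const exampleRegistry = ecrRegistryHost('123456789012', 'us-east-2')
const exampleImage = imageReference({
  registry: exampleRegistry,
  repository: 'test-repo',
  tag: 'abc1234' // in the workflow this is the full commit SHA
})
console.log(exampleImage)
// 123456789012.dkr.ecr.us-east-2.amazonaws.com/test-repo:abc1234
```

Tagging by commit SHA rather than latest means every deployment points at one specific build, which makes rolling back to an earlier task definition revision straightforward.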

2. Create an ECS Task Definition

The Task Definition tells AWS, amongst other things, how to run your container. There are dozens of configuration options you can set, but the minimal configuration example we’re going to use looks like this

{
  "requiresCompatibilities": ["FARGATE"],
  "executionRoleArn": "arn:aws:iam::${YOUR_IAM}:role/ecsTaskExecutionRole",
  "containerDefinitions": [
    {
      "name": "test-task",
      "image": "${YOUR_IAM}.dkr.ecr.${YOUR_REGION}.amazonaws.com/test-repo:latest",
      "cpu": 128,
      "memory": 128,
      "portMappings": [
        {
          "containerPort": 3000,
          "hostPort": 3000,
          "protocol": "tcp"
        }
      ],
      "essential": true,
      "interactive": true,
      "pseudoTerminal": true
    }
  ],
  "family": "test-task",
  "networkMode": "awsvpc",
  "cpu": "256",
  "memory": "512",
  "volumes": []
}

Replace ${YOUR_IAM} with your IAM, which is generally the number identifying your AWS account, and ${YOUR_REGION} with your region, then store it in a file called test-task.json in the root of your repository
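The task-def step of the workflow takes this file and fills in the image that was just pushed. The sketch below approximates that idea in plain JavaScript; it is not the action’s actual implementation, and the function name renderTaskDefinition and the shortened image names are assumptions made for illustration.

```javascript
// Sketch: return a copy of a task definition with one container's image replaced.
// Approximates what the "Fill in the new image ID" workflow step is for.
function renderTaskDefinition(taskDefinition, containerName, image) {
  const containers = taskDefinition.containerDefinitions || []
  if (!containers.some((c) => c.name === containerName)) {
    throw new Error(`No container named "${containerName}" in the task definition`)
  }
  return {
    ...taskDefinition,
    containerDefinitions: containers.map((c) =>
      c.name === containerName ? { ...c, image } : c
    )
  }
}

// Trimmed-down stand-in for test-task.json.
const exampleTaskDef = {
  family: 'test-task',
  containerDefinitions: [{ name: 'test-task', image: 'test-repo:latest' }]
}
const renderedTaskDef = renderTaskDefinition(exampleTaskDef, 'test-task', 'test-repo:abc1234')
console.log(renderedTaskDef.containerDefinitions[0].image) // test-repo:abc1234
```

The lookup by container name is why the container-name value in the workflow has to match the name inside containerDefinitions: if it does not, there is nothing to replace.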

3. Create and configure the ECS cluster

- Head to the clusters view in the AWS console and create a new cluster

- Choose Networking only for the Fargate configuration, then press on Next step. If you choose any of the EC2 options instead, you will have to create a new cluster later to get Fargate’s automatic resource management by default

- Set the cluster name (for this example it will be test-cluster)

- Optionally create a new VPC if you don’t have one already and press on Create

Then, you can go to the clusters view on the left, and see your newly created Fargate cluster.

4. Create a task definition for the service

Once the pipeline is running, every deploy to master registers a new revision of the Task Definition and updates the service to use it automatically. Creating the service in the first place, however, requires an existing task definition, so this step creates an initial one.

- Go to the console and the ECS section

- On the left select Task Definitions

- Create new Task Definition

- Choose FARGATE and Next step

- Set name to test-task, role to ecsTaskExecutionRole, same for Task execution IAM role

- Task memory and CPU to the minimum

- Set up the container, which should be similar to the JSON file for the task definition

- Press on Create
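Fargate only accepts certain pairings of task CPU and memory, and the minimum corresponds to the "256" and "512" values used in test-task.json. The sketch below checks a pairing against a small lookup table. The table is an assumption based on the AWS documentation at the time of writing and deliberately covers only the smaller CPU values, so a false result for a larger size does not mean that size is invalid; the names are made up for this guide.

```javascript
// Sketch: check a task-level cpu/memory pairing against a partial table of
// Fargate sizes (CPU units -> allowed memory in MiB). Larger sizes exist
// and are intentionally left out of this table.
const KNOWN_FARGATE_SIZES = {
  256: [512, 1024, 2048],
  512: [1024, 2048, 3072, 4096],
  1024: [2048, 3072, 4096, 5120, 6144, 7168, 8192]
}

// Accepts numbers or numeric strings, since the task definition stores
// task-level cpu and memory as strings.
function isKnownFargateSize(cpu, memory) {
  const allowed = KNOWN_FARGATE_SIZES[Number(cpu)]
  return Array.isArray(allowed) && allowed.includes(Number(memory))
}

console.log(isKnownFargateSize('256', '512')) // true
console.log(isKnownFargateSize('256', '128')) // false
```

Note that the 128 values inside containerDefinitions are container-level settings and are not subject to this table; only the task-level cpu and memory are.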

5. Create and configure a service in the cluster

Now select the cluster you just created from the list of clusters (test-cluster) and create a new service. A service is like a deployment that keeps Tasks running; a task is, for example, a container image pulled from the registry and run.

- Press on Create

- Set the Task definition to the one you created in the previous step, which in our case is the one named under “family” in the test-task.json file (test-task)

- Set the service name, which in our case is test-service

- Set the number of tasks, which should be 1 for now

- Leave other parameters as-is and press Next step

- Select the cluster VPC (the Virtual Private Cloud); you can choose the default one or, if you created one with the cluster, select that one

- For the subnets, choose at least one, e.g. us-east-2a

- For the security groups, a permissive group is fine for a test cluster, as long as it allows inbound traffic on port 3000, the port the container listens on; you can change the security group’s permissions later on from the security groups administration in AWS

- Leave the rest of the parameters as-is and press Next step

- Do not adjust the service’s desired count for now and press Next step

- Check that everything is alright and confirm the service with Create service

NOTE: Additionally, you can follow the Getting Started guide on the ECS console

Now that you have created a cluster and a service, you can set their names in the cluster and service fields of the aws.yml workflow file.

6. Store an IAM user access key and secret in the repository’s GitHub Actions secrets

Go to the GitHub repository hosting the project and go to Settings -> Secrets and add 2 keys with their corresponding values: AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY.

If you don’t have them already, you can find out how to get them here

7. Test it

Now that you’ve followed all the steps, you can commit your changes and push them to master, then go to the GitHub repository and navigate to the Actions section to watch the build process and see if it deployed correctly.

If there’s any issue, you can try adding an additional secret to the repository, ACTIONS_STEP_DEBUG with the value true, which will give you additional information about everything going on in each step of the workflow for easier debugging.

Finally, go to the Task in the console, and you can see the external IP address and check out your service.

- Go to the ECS section on the AWS console

- Select Clusters on the left

- Select the test-cluster

- Select the test-service

- Switch to the Tasks tab

- Select the task, which should be RUNNING, and you will find the Public IP

- Navigate to the public IP on port 3000 in your browser (http://PUBLIC_IP:3000) and you should see

Hello World!
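The same check can be scripted instead of done in the browser. This is a minimal sketch using only Node’s built-in http module; the function names are made up for this guide, the port is the 3000 that index.js listens on, and 203.0.113.10 is a documentation-only address that you would replace with your task’s public IP.

```javascript
const http = require('http')

// Build the service URL from the task's public IP; the app listens on 3000.
function serviceUrl(publicIp, port = 3000) {
  return `http://${publicIp}:${port}/`
}

// Fetch the page and resolve to true if the response is 200 "Hello World!".
function checkService(url) {
  return new Promise((resolve, reject) => {
    http
      .get(url, (res) => {
        let body = ''
        res.setEncoding('utf8')
        res.on('data', (chunk) => { body += chunk })
        res.on('end', () => resolve(res.statusCode === 200 && body === 'Hello World!'))
      })
      .on('error', reject)
  })
}

// Usage (replace the address with your task's public IP):
// checkService(serviceUrl('203.0.113.10'))
//   .then((ok) => console.log(ok ? 'deployed' : 'unexpected response'))
//   .catch((err) => console.error('request failed:', err.message))
```

If the request fails with a timeout, the security group is the first thing to look at, since the task can be RUNNING while port 3000 is still closed to the outside.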